Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Welcome to the world of Kubernetes, a platform that takes much of the pain out of container management. Originally developed at Google and now maintained under the Cloud Native Computing Foundation (CNCF), Kubernetes handles container orchestration for you. Written in Go, it automates deploying, scaling, and load-balancing containers. Whether you run in the cloud or on your own hardware, Kubernetes adapts to the environment, and its large community means plenty of tooling and support.

Kubernetes, often abbreviated as K8s, is a powerful open-source container orchestration platform. At its core, it is designed to automate the deployment, scaling, and management of containerized applications.

Kubernetes provides a robust framework for deploying and managing containers at scale. It simplifies complex tasks associated with deploying and maintaining applications, offering features such as automated load balancing, scaling, and rolling updates. The platform also enhances the resilience and reliability of applications by ensuring high availability and fault tolerance.

With Kubernetes, users can define how their applications should run, scale, and interact with other services. It abstracts the underlying infrastructure, making it easier to manage applications in dynamic and diverse environments, including on-premises data centers and various cloud providers.
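To make "define how their applications should run" concrete, here is a minimal sketch that builds a Deployment manifest as a plain Python dictionary and prints it as JSON. The application name `web`, the image `nginx:1.25`, the port, and the helper `make_deployment` are illustrative assumptions, not anything prescribed by this article.

```python
import json


def make_deployment(name, image, replicas):
    """Build a minimal apps/v1 Deployment manifest as a plain dict.

    The name, image, and port are placeholder values for illustration.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired state: how many copies of the Pod should be running.
            "replicas": replicas,
            # The selector must match the labels on the Pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


manifest = make_deployment("web", "nginx:1.25", replicas=3)
print(json.dumps(manifest, indent=2))
```

If you save the printed JSON to a file, `kubectl apply -f` accepts it just like a YAML manifest. The point is that you describe the state you want (three replicas of this container) rather than the steps to get there.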

In summary, Kubernetes acts as a container orchestration tool, streamlining the deployment and management of containerized applications while providing features for scalability, predictability, and high availability.

Let’s explore the significance of Kubernetes by taking a trip back in time.

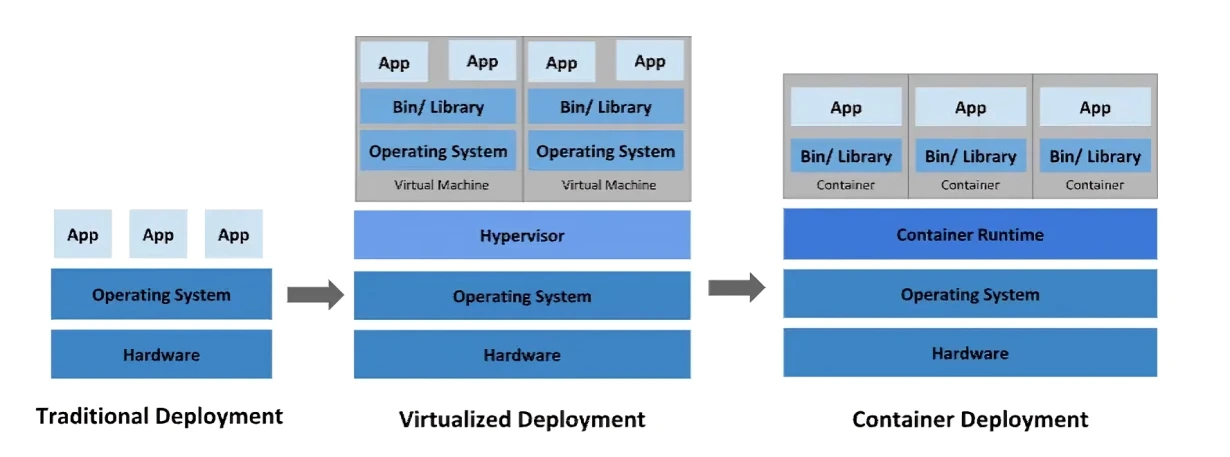

Traditional Deployments:

In the old days, people ran applications directly on physical servers, and that approach had a problem. If you had multiple apps on one server, one could hog all the resources, leaving the others struggling. The solution was to put each app on its own server, but that got too expensive.

Virtualized Deployments:

Then came virtualization. It let you run several Virtual Machines (VMs) on one physical server, keeping each app separate and secure. This meant better use of server resources, easier scalability, and cost savings. Each VM was like a full computer, with its own operating system.

Container Deployments:

Now, we have containers. They’re like VMs but lighter. Instead of each carrying a full operating system, containers share the host’s operating system kernel while keeping their own filesystem, processes, and network separate. This makes them quick to start and portable across different systems. Containers are a modern way to run applications, providing a balance between isolation and efficiency.

The API Server acts as the hub of communication for the entire Kubernetes cluster. Think of it as the control center where you send your requests and receive information about the cluster’s current state. It manages the entire cluster by validating and processing the requests and ensuring a consistent view of the system.

The Scheduler is like a smart coordinator that decides where to deploy your applications’ workloads. It evaluates factors like resource availability and constraints to find the best-suited node for your tasks. Essentially, it ensures optimal distribution of work across the cluster.
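The idea of "filter the nodes that can fit the workload, then pick the best one" can be sketched in a few lines. This is a toy model, not the real scheduler: the `Node` class, the `pick_node` function, and the scoring rule (prefer the node with the most headroom left) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu: float      # CPU cores not yet allocated
    free_mem_gib: float  # memory (GiB) not yet allocated


def pick_node(nodes, cpu_request, mem_request_gib):
    """Return the chosen Node, or None if no node can fit the request."""
    # Step 1 (filter): drop nodes that cannot satisfy the request.
    feasible = [
        n for n in nodes
        if n.free_cpu >= cpu_request and n.free_mem_gib >= mem_request_gib
    ]
    if not feasible:
        return None

    # Step 2 (score): prefer the node with the most headroom afterwards.
    # Adding cores and GiB together is a deliberate simplification.
    def headroom(n):
        return (n.free_cpu - cpu_request) + (n.free_mem_gib - mem_request_gib)

    return max(feasible, key=headroom)


nodes = [
    Node("node-a", free_cpu=1.0, free_mem_gib=2.0),
    Node("node-b", free_cpu=4.0, free_mem_gib=8.0),
    Node("node-c", free_cpu=0.5, free_mem_gib=16.0),
]
chosen = pick_node(nodes, cpu_request=1.0, mem_request_gib=1.0)
print(chosen.name if chosen else "unschedulable")
```

Here `node-c` is filtered out because it lacks CPU, and `node-b` wins on headroom. The real scheduler weighs many more factors, but the filter-then-score shape is the same.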

The Controller Manager is responsible for overseeing and regulating the desired state of the cluster. It constantly works to ensure that the system aligns with your defined configurations. Controllers, like the Node Controller or Replication Controller, are part of this component and play specific roles in maintaining the desired state.
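The "keep the actual state aligned with the desired state" behavior is often described as a reconciliation loop. Below is a minimal sketch of one pass of such a loop. The `reconcile` function and the pod naming scheme are hypothetical, and real controllers act through the API Server rather than on an in-memory list.

```python
import itertools

# Hypothetical source of unique pod names for this toy model.
_pod_ids = itertools.count(1)


def reconcile(desired, running):
    """Return the pod list after one reconciliation pass.

    desired: number of pods that should exist
    running: names of pods that currently exist
    """
    pods = list(running)
    # Too few pods: create replacements until the count matches.
    while len(pods) < desired:
        pods.append(f"pod-{next(_pod_ids)}")
    # Too many pods: remove the extras.
    while len(pods) > desired:
        pods.pop()
    return pods


pods = reconcile(3, [])     # initial rollout: three pods are created
pods.remove(pods[0])        # simulate one pod crashing
pods = reconcile(3, pods)   # the next pass notices the gap and fills it
print(pods)
```

Nothing in the loop cares why a pod went missing. It only compares what exists with what should exist, which is why the same mechanism covers crashes, scale-ups, and scale-downs.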

The Cloud Controller Manager acts as an extension of the Controller Manager, focusing on cloud-specific functionality. It interacts with cloud provider APIs to manage resources native to the chosen cloud environment. For instance, it can handle load balancers or storage systems specific to your cloud provider.

The Kubelet is your node’s watchful guardian. It ensures that containers are running as expected on each node by communicating with the API Server. It takes care of tasks like starting, stopping, and maintaining application containers based on the instructions it receives from the control plane.

Kube-Proxy is like the traffic manager for your services. It runs on each node and maintains the network rules that route traffic addressed to a Service to one of the Pods behind it. By spreading connections across those Pods, it keeps your services reachable from inside the cluster and, when a Service is exposed externally, from the outside world.
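As a rough picture of the load-balancing part, the sketch below hands each new connection to the next backend in turn. The `ServiceProxy` class and the endpoint addresses are made up for illustration. The real Kube-Proxy programs network rules on the node instead of forwarding traffic itself, and how it picks a backend depends on the mode it runs in.

```python
import itertools


class ServiceProxy:
    """Toy stand-in for a Service: one stable front, many Pod backends."""

    def __init__(self, endpoints):
        if not endpoints:
            raise ValueError("a service needs at least one endpoint")
        # Cycle through the backends forever, in order (round-robin).
        self._next_endpoint = itertools.cycle(list(endpoints))

    def route(self):
        """Return the backend address for the next incoming connection."""
        return next(self._next_endpoint)


proxy = ServiceProxy(["10.0.0.11:8080", "10.0.0.12:8080", "10.0.0.13:8080"])
for _ in range(4):
    print(proxy.route())
```

Clients only ever see the Service. Pods behind it can come and go without the caller needing to know their addresses.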

Pods are the fundamental units of deployment in Kubernetes. They encapsulate one or more containers, along with shared storage and network resources. Think of a Pod as a logical host for your applications, where containers within the Pod can communicate easily and share resources.
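Here is a small sketch of what "one or more containers with shared storage" looks like in a Pod manifest, again written as a Python dictionary and printed as JSON. The Pod name, container names, images, and mount paths are assumptions chosen for the example.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar"},
    "spec": {
        # An emptyDir volume lives as long as the Pod and is visible to
        # every container that mounts it.
        "volumes": [{"name": "shared-logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/var/log/nginx"}
                ],
            },
            {
                # Sidecar that reads what the web container writes.
                "name": "log-tailer",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/logs"}
                ],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```

Both containers mount the same volume under different paths, so the sidecar sees the files the web server writes. Containers in a Pod also share a network namespace, so they can reach each other over `localhost`.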

The Container Runtime is the engine that brings your containers to life. It’s responsible for pulling container images, running containers, and managing their lifecycle. Popular runtimes include containerd and CRI-O. Docker Engine can also be used, but since Kubernetes 1.24 it needs the cri-dockerd adapter, because the built-in Docker integration (dockershim) was removed in that release.

Kubernetes helps you run large, reliable applications in containers. Its architecture can look intimidating at first, but it’s worth the effort: few open-source platforms match its flexibility and feature set for cloud-native work. Once you get the hang of how the basic pieces fit together (the API Server, Scheduler, controllers, Kubelet, Kube-Proxy, Pods, and the container runtime), you can design systems that make the most of what Kubernetes can do and handle big projects smoothly.

1. What is Kubernetes, and why is it important for applications?

A: Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. It ensures applications run consistently across various environments.

2. How does Kubernetes improve application scalability and performance?

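A: Kubernetes lets you scale an application by changing the number of running instances (replicas), either manually or automatically based on load. The Scheduler places those instances on nodes with enough free resources, which helps keep utilization balanced across the cluster.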

3. What are the key features of Kubernetes that benefit developers and operations teams?

A: Kubernetes provides features like automatic load balancing, self-healing, and rolling updates. These simplify application development, deployment, and maintenance tasks.

4. Can you explain the basic architecture of Kubernetes in simple terms?

A: Kubernetes has a control plane (historically called the master node) that manages worker nodes. The control plane decides where applications should run, while the worker nodes actually run them.

5. What advantages does Kubernetes offer for containerized application management?

A: Kubernetes streamlines the deployment process, ensures consistent application behavior, and provides robust tools for monitoring, scaling, and maintaining containerized applications.